JasonPAtkins

Hot Rolled

- Joined: Sep 30, 2010
- Location: Guinea-Bissau, West Africa

Hey all,

This is a follow-up to a previous thread where I was using a transformer off-label, using only some of the secondary's taps. The CliffsNotes version of that thread: I was seeing about a 600 W no-load draw from a 3 kVA transformer, and the general consensus was that this was unreasonably high and was probably because I was using a 60 Hz transformer on a 50 Hz system.

Well, I brought over (in my airline suitcase, no less) a 1.6(7.35) kVA transformer that has the proper taps and is labeled for 50 Hz (50/60 Hz). I've hooked it up, hoping to have fixed the vampire-draw problem, and I'm seeing the same results! I'm feeding 230 V 50 Hz single-phase into the primary and pulling 120 V 50 Hz out, and the voltage is being transformed correctly. However, I'm still measuring almost 3 A into the transformer at no load, which is about 600 W. Please check my pics, but I believe I have it wired and jumpered correctly.
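
The "about 600 W" figure is the primary voltage multiplied by the clamp-meter current. Below is a minimal sketch of that arithmetic, assuming a 230 V primary and a few illustrative current readings around the "almost 3 amps" in the post; the helper name `apparent_power_va` is hypothetical. Keep in mind that V × I from a clamp meter is apparent power in volt-amperes (VA). It equals real power in watts only when the power factor is 1.

```python
# Apparent power from a clamp-meter reading: S = V_rms * I_rms, in volt-amperes.
# Assumptions: 230 V nominal primary voltage; the currents below are illustrative
# values around the "almost 3 amps" reported, not actual logged readings.

def apparent_power_va(v_rms: float, i_rms: float) -> float:
    """Return apparent power in VA (equal to real watts only if power factor = 1)."""
    return v_rms * i_rms


PRIMARY_V = 230.0  # nominal primary voltage from the post

for amps in (2.6, 2.8, 3.0):
    # Each line shows how a given clamp reading maps to VA at 230 V.
    print(f"{amps:.1f} A -> {apparent_power_va(PRIMARY_V, amps):.0f} VA")
```

Roughly 2.6 A at 230 V is what lands on "about 600"; a full 3 A would be closer to 690 VA.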

Pardon the mess of my temporary system. Here's how I have it hooked up. (I just put multimeter leads on the output.)

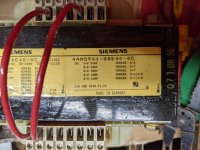

Here are closer pictures of the label:

Measurements:

I'm wondering whether this is an actual problem or a measurement problem. Is there something peculiar about measuring the current into a transformer with a clamp meter? I ask because the system is supplied by an off-grid solar inverter, and the inverter reports only 200 W of draw by the transformer at the same moment that my Fluke is measuring 600 W going from the inverter to the transformer. For an off-grid system that carefully watches its loads, 200 W around the clock is still a lot for a device that won't be doing anything most of the time. It's less than 600 W, but still far more than the ~1% of FLA that members here said to expect from an idling transformer.
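
One way to reconcile the two readings, assuming the inverter displays real power (W) while the Fluke figure is V × I (apparent power, VA): a transformer's no-load current is mostly magnetizing current, which is largely reactive, so the two numbers can legitimately differ. The sketch below plugs the post's numbers into the power triangle (P = S × PF, Q = √(S² − P²)), converts the ~1% rule of thumb into VA against the 1.6 kVA nameplate figure, and works out the daily energy of a constant 200 W draw. All variable names are illustrative, and whether the inverter's display is true real power is an assumption the post does not confirm.

```python
import math

# Figures from the post. Interpretation (an assumption, not confirmed):
#   clamp meter V * I  -> apparent power S in VA (includes reactive magnetizing current)
#   inverter display   -> real power P in W (what the batteries actually supply)
S_CLAMP_VA = 600.0     # Fluke-based figure, really V * I
P_INVERTER_W = 200.0   # inverter-reported draw
RATING_VA = 1600.0     # assumed from the "1.6" kVA nameplate figure
IDLE_FRACTION = 0.01   # forum rule of thumb: ~1% of full-load current at idle

# Power triangle: PF = P / S, Q = sqrt(S^2 - P^2)
power_factor = P_INVERTER_W / S_CLAMP_VA
reactive_var = math.sqrt(S_CLAMP_VA**2 - P_INVERTER_W**2)

# 1% of rated current at rated voltage is about 1% of the VA rating.
expected_idle_va = IDLE_FRACTION * RATING_VA

# Energy used by a constant 200 W draw over 24 hours, in kWh.
daily_kwh = P_INVERTER_W * 24 / 1000

print(f"no-load power factor    ~ {power_factor:.2f}")
print(f"reactive power          ~ {reactive_var:.0f} var")
print(f"rule-of-thumb idle draw ~ {expected_idle_va:.0f} VA")
print(f"energy at 200 W for 24 h ~ {daily_kwh:.1f} kWh/day")
```

If the assumption holds, a low no-load power factor like this is consistent with a mostly magnetizing idle current, and the 200 W real-power figure is the one that actually drains the batteries.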

Anyone have any insight here?

Thanks!